On my materials development module, we’ve just looked at reading and listening tasks.

We spoke about what makes good/bad comprehension questions. ‘Plain sense’ questions are seen as pretty ineffective, as they just test familiarity with sentence structure rather than actual understanding. Here’s an example of a plain sense question that I came across on my Dip (at TLI):

Most zins are bosticulous. Many rimp upon pilfides.

Q1. What are most zins?

Q2. What do many rimp on?

Although the text is full of nonsense words, you can answer the questions without really understanding it.

I can see why these questions aren’t considered that useful. So why do coursebook writers fall back on them?

I know, it’s really easy to be critical. I’m guilty of writing rubbish questions like this too. Actually, I don’t do it that often. I prefer to write really ambiguous questions that will prompt conversation/debate – these are equally annoying, I think!

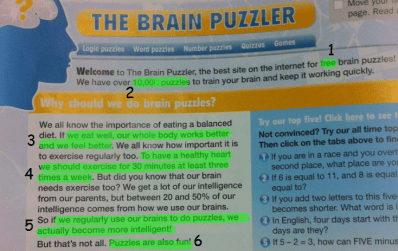

But these ‘plain sense’ questions… I mean… I came across this activity the other day:

These are from Beyond A2+ (copyright Macmillan), which is actually quite a good coursebook.

Here’s the text, with relevant parts highlighted for each answer:

I’m not saying the whole activity is pointless. It’s just that some questions are plain sense or focus on simple grammatical relationships.

Q4: To have a healthy heart, how often do we need to exercise?

A: (To have a healthy heart, we should exercise for) 30 minutes at least three times a week

Q5: What happens if we do puzzles?

A: (if we regularly use our brains to do puzzles), we actually become more intelligent

I could give the author the benefit of the doubt, I guess. For example, you can still teach some reading strategies related to the questions. In Q4, students could predict the type of answer from the question stem (e.g. How often = a frequency), then scan the text quickly for the relevant info – if they didn’t already have a massive clue from being given the start of the sentence. Maybe Q5 draws attention to the word ‘intelligent’ as new vocabulary, but you don’t need to know its meaning to answer the comprehension question.

I’m not suggesting how these questions could be improved. That’s not because I’m lazy. It’s because they must have been written by a far more experienced teacher and then approved by a skilled editor, both of whom must have had a clear pedagogical rationale for choosing them.

I suppose it’s easier to write plain sense questions and justify level mapping than it is to write questions that actually prompt inquiry, which might rely on extra-textual resources – always a problem for those who want everything to fit into a nice little box.

Yeah, true. I can see that writers must work under a lot of constraints. Still, the level is A2+. I’d like to see fewer plain sense Qs by that level. Just preference, I guess…

Plain sense questions do my nut in. I guess at beginner and elementary levels they may be useful for building confidence, but beyond that they are useless. Of course, I can write my own questions, but isn’t that why we bought the coursebook?

You’d think so. There are a lot of valid criticisms of coursebooks and many overriding issues that I often, due to context, turn a blind eye to. But when you can’t get the simple things right…!

Hiya.

I think you’re *wrong* to not think of better ways. Who is to say that the writer’s and editor’s rationale is right?

Personally, I’d be tempted toward a global question with parameters, like “What points were interesting? Choose your favourite and explain why.”

There are too many unthreatening coursebook questions that provide low-hanging fruit but don’t really motivate learners to aim for more than a superficial understanding.

Also, are learner-generated questions more authentic and more likely to lead to what learners need?

I was just being diplomatic. My personal view is that the author and editor have made poor choices here. I can rectify this in my practice by writing questions that better engage my learners and develop their reading skills/strategies further. So all is not lost!

Your global question sounds good. It wouldn’t work in my context, but neither would the coursebook questions themselves, so that tells us a bit about materials development – keep it localised and personalised, I guess…

Interested in your comment about learner-generated questions. How would you go about that and how would you ensure it addresses learner needs?

I’d go with a post-reading task that’s simple and authentic: "What’s one question you have about the text for other class members?" It’s a real reason for re-reading and isn’t some predestined nonsense. If the learners are simply going through the motions, what’s the point of the text, either? If you want to know how well a text is understood, ask for summaries.

If the text is there to present linguistic items, have learners search for these for homework, unless you have very text-specific content to look at.

Cheers!
